Introduction to Kubernetes

The AI on Demand platform uses Kubernetes to deploy the pipeline. The platform generates all the required Kubernetes deployment files automatically, so you do not need to write them yourself. A basic understanding of Kubernetes is still useful: it makes the deployment process easier to follow and simplifies debugging.

What is Container Orchestration?

Container orchestration is like the conductor of an orchestra: it manages and coordinates the deployment, scaling, and operation of containers. Containers are lightweight, standalone, portable software packages that contain everything needed to run an application. Kubernetes is an open-source container orchestration system and is currently the most widely used one.

Kubernetes structure

Kubernetes organizes machines into clusters, which are groups of computers called nodes. Each node is like a member of a team, doing its part to run applications. On each node, Kubernetes manages units called pods. A pod holds one or more tightly related containers, each of which runs a separate part of your application. In short: a cluster is a group of nodes, each node hosts pods, and each pod holds the containers where your applications actually run. Kubernetes keeps everything coordinated and running smoothly, just like a conductor leading an orchestra.
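The containment hierarchy described above (cluster, node, pod, container) can be pictured as nested data. The following is a minimal sketch in plain Python, not Kubernetes code: the class names, node names, and image references such as `example/web:1.0` are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Container:
    name: str
    image: str  # hypothetical image reference, for illustration only


@dataclass
class Pod:
    name: str
    containers: List[Container] = field(default_factory=list)


@dataclass
class Node:
    name: str
    pods: List[Pod] = field(default_factory=list)


@dataclass
class Cluster:
    nodes: List[Node] = field(default_factory=list)

    def all_containers(self) -> List[str]:
        """Return a 'node/pod/container' path for every container in the cluster."""
        return [
            f"{node.name}/{pod.name}/{container.name}"
            for node in self.nodes
            for pod in node.pods
            for container in pod.containers
        ]


# A toy cluster: two nodes, one pod each; the "web" pod holds two related containers.
cluster = Cluster(nodes=[
    Node("node-1", pods=[
        Pod("web", containers=[
            Container("app", "example/web:1.0"),
            Container("log-forwarder", "example/logs:1.0"),
        ]),
    ]),
    Node("node-2", pods=[
        Pod("worker", containers=[Container("job", "example/worker:1.0")]),
    ]),
])

for path in cluster.all_containers():
    print(path)
```

Walking the structure from the outside in mirrors how you usually locate a problem when debugging: first the node, then the pod, then the container inside it.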

Clusters: The Backbone of Container Orchestration

A cluster is a group of interconnected computers, often referred to as nodes or hosts, that work together to perform a unified task. In the context of container orchestration, a cluster typically consists of multiple machines (physical or virtual) that are connected over a network and managed as a single unit.

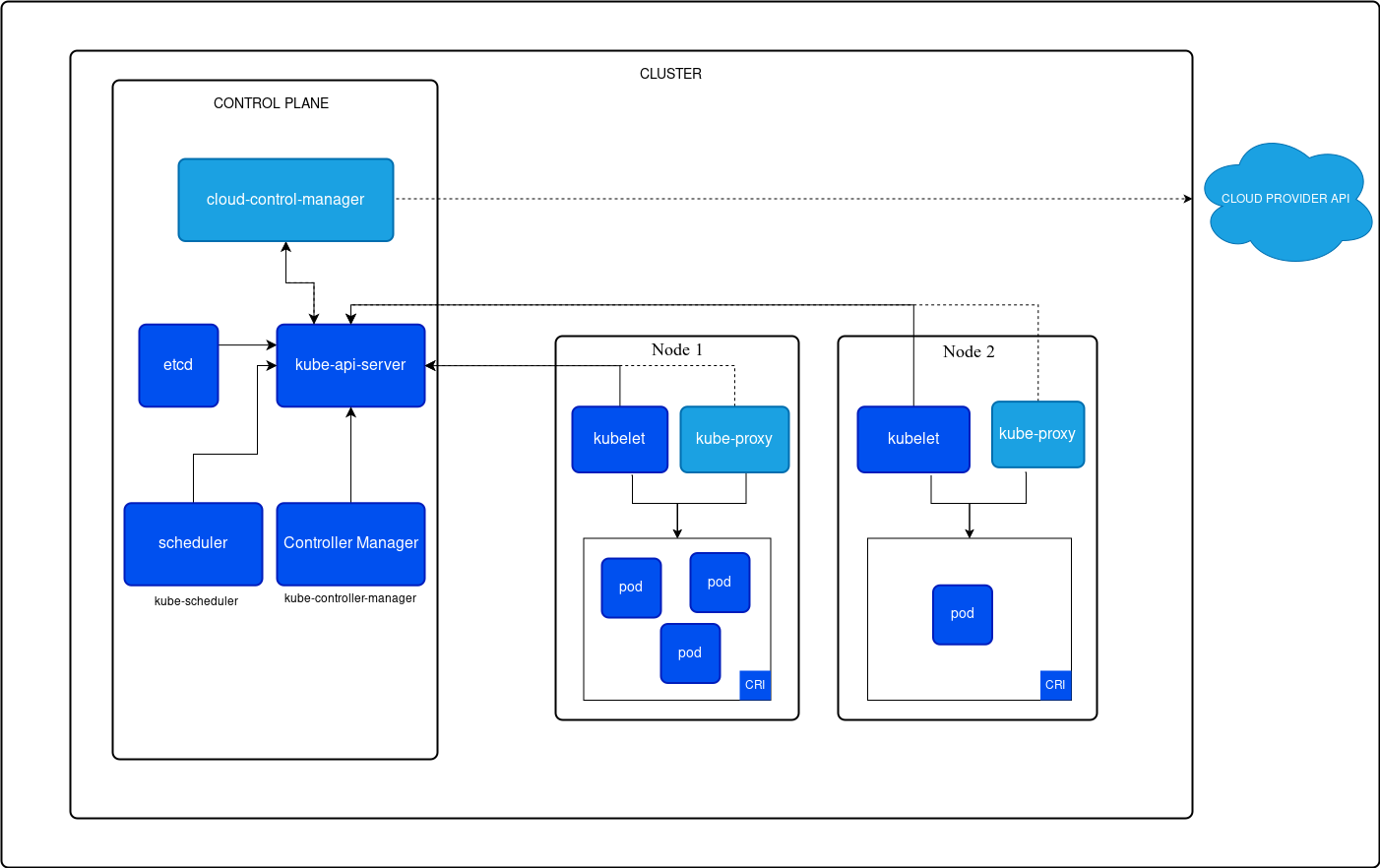

A Kubernetes cluster typically consists of two main parts: the control plane (sometimes called the master node) and one or more worker nodes. The control plane manages the entire cluster and makes global decisions about the cluster state, such as which node a new pod should run on. It includes the API server, the scheduler, the controller manager, and etcd, the key-value store that holds the cluster state. Worker nodes are where the actual workloads run: they host the pods and containers that make up your applications. In a nutshell, a Kubernetes cluster contains both the brains (control plane) and the muscle (worker nodes) needed to manage and run your applications.
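To make the idea of a "global decision" concrete, here is a toy placement function: it picks the worker node with the most free CPU that can still fit a pod's request. This is a deliberately simplified sketch and not the actual Kubernetes scheduler, which weighs many more factors. The function name `pick_node`, the node names, and the CPU figures are assumptions made for illustration.

```python
from typing import Dict, Optional


def pick_node(free_cpu: Dict[str, float], requested_cpu: float) -> Optional[str]:
    """Return the node with the most free CPU that fits the request, or None."""
    # Step 1: keep only nodes that have enough free CPU for the pod.
    candidates = {name: cpu for name, cpu in free_cpu.items() if cpu >= requested_cpu}
    if not candidates:
        return None  # no node can host the pod right now
    # Step 2: among the remaining nodes, prefer the one with the most headroom.
    return max(candidates, key=candidates.get)


# Hypothetical free CPU (in cores) per worker node.
free_cpu = {"worker-1": 0.5, "worker-2": 2.0, "worker-3": 1.0}

print(pick_node(free_cpu, requested_cpu=1.0))
print(pick_node(free_cpu, requested_cpu=4.0))
```

When no node fits the request, the function returns `None`. In a real cluster, a pod that cannot be placed typically stays in a pending state until resources become available, which is a common thing to check when a deployment does not start.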

Role of Clusters in Container Orchestration

Resource Pooling: Clusters pool together the computing resources (such as CPU, memory, and storage) of individual machines, providing a larger pool of resources for running containerized applications. This pooling allows for efficient utilization of resources across the cluster.

High Availability: Clusters enhance the availability of containerized applications by distributing them across multiple nodes within the cluster. If one node fails, the applications can be automatically rescheduled and restarted on other healthy nodes, ensuring continuous operation.

Scalability: Clusters facilitate horizontal scalability by allowing you to add or remove nodes dynamically based on workload demands. This enables applications to scale up or down seamlessly to handle varying levels of traffic or processing requirements.

Load Balancing: Clusters often include built-in load balancing capabilities that distribute incoming traffic across multiple nodes to prevent any single node from becoming overwhelmed. This ensures that applications remain responsive and available, even during periods of high demand.

Fault Tolerance: Clusters are designed to be fault-tolerant, meaning they can continue operating even if individual nodes or components fail. They typically employ mechanisms such as replication, redundancy, and automated recovery to minimize the impact of failures on running applications.

Centralized Management: Clusters provide a centralized management interface for deploying, monitoring, and managing containerized applications across the entire cluster. This simplifies administrative tasks and ensures consistency and uniformity in the management of resources and workloads.

In summary, clusters play a crucial role in container orchestration by providing a scalable, resilient, and efficient infrastructure for running and managing containerized applications.
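The high availability and fault tolerance behaviour described above can be sketched as a small simulation: when a node fails, its pods are moved to the least-loaded healthy nodes. This is a toy model under stated assumptions, not how Kubernetes implements recovery. The function `reschedule`, the node names, and the pod names are hypothetical.

```python
from typing import Dict, List


def reschedule(placement: Dict[str, List[str]], failed_node: str) -> Dict[str, List[str]]:
    """Return a new placement with the failed node's pods moved to healthy nodes."""
    # Copy the pod lists of all healthy nodes so the input is left unchanged.
    healthy = {node: list(pods) for node, pods in placement.items() if node != failed_node}
    if not healthy:
        raise RuntimeError("no healthy nodes left to host the pods")
    for pod in placement.get(failed_node, []):
        # Place each orphaned pod on the node currently running the fewest pods.
        target = min(healthy, key=lambda node: len(healthy[node]))
        healthy[target].append(pod)
    return healthy


# Hypothetical starting state: node-1 runs two pods, node-2 one, node-3 none.
placement = {"node-1": ["web-a", "web-b"], "node-2": ["web-c"], "node-3": []}

after = reschedule(placement, failed_node="node-1")
print(after)
```

The sketch also shows why resource pooling matters: recovery only works if the remaining nodes have spare capacity to absorb the displaced pods.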

Advantages of Container Orchestration

Container orchestration platforms serve as the backbone of modern application management. By automating essential operational tasks, they simplify complex work and keep applications running reliably. Some of the concrete benefits of container orchestration are:

Efficient Deployment: Orchestration automates the process of deploying containers, making it faster and more reliable. It ensures that applications are deployed consistently across different environments.

Scaling: Orchestration platforms allow you to scale your applications seamlessly by adding or removing containers based on demand. This helps maintain optimal performance and resource utilization.

High Availability: Orchestration ensures that your applications remain available even if there are failures in the underlying infrastructure. It automatically detects and replaces failed containers to minimize downtime.

Resource Optimization: Orchestration platforms can optimize resource usage by scheduling containers on available resources and consolidating workloads. This helps reduce costs and maximize efficiency.

Service Discovery and Networking: Orchestration provides tools for service discovery and networking, allowing containers to communicate with each other efficiently and securely, regardless of their location or IP address.
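Scaling and automatic replacement of failed containers both follow from the same idea: the orchestrator compares the desired state with what is actually running and corrects the difference. The following is a minimal sketch of one such correction pass in plain Python. The function `reconcile`, the `web` name prefix, and the pod names are assumptions for illustration and do not reflect the real Kubernetes implementation.

```python
from typing import List


def reconcile(running: List[str], desired_replicas: int, prefix: str = "web") -> List[str]:
    """Return the pod list after one pass toward the desired replica count."""
    pods = list(running)
    counter = 0
    # Too few pods (a pod failed, or the user scaled up): start new ones.
    while len(pods) < desired_replicas:
        name = f"{prefix}-{counter}"
        if name not in pods:
            pods.append(name)
        counter += 1
    # Too many pods (the user scaled down): keep only the desired number.
    return pods[:desired_replicas]


# Self-healing: "web-1" has failed, so only two of three desired pods are running.
print(reconcile(["web-0", "web-2"], desired_replicas=3))

# Scaling down: three pods are running but only two are desired.
print(reconcile(["web-0", "web-1", "web-2"], desired_replicas=2))
```

Because the function only looks at the gap between the desired and the actual count, the same logic covers both recovering from a failure and responding to a change in demand.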